Earlier this week we had a look at Gainward's Ultra/3500PCX XP Golden Sample, an overclocked GeForce 7800 GTX. Without giving too much away to anyone who hasn't read the full review, it is one of the fastest video cards around and it comes with an awesome retail price. That combination makes it one of the most desirable video cards you can buy today.

Today, NVIDIA announce the second addition to the GeForce 7 series: the GeForce 7800 GT. As with the launch of the GeForce 7800 GTX, NVIDIA are hard launching this video card, so by the time you have finished reading this review you should be able to buy one from your favourite online retailer.

Rather than a typical reference card review, we've spent the last few days putting two matched pairs of retail GeForce 7800 GTs through their paces in the labs, and you can go out and buy them later today. Before we look at the retail cards, we'll take a quick look at what is under the hood of the GeForce 7800 GT and how it differs from the GeForce 7800 GTX.

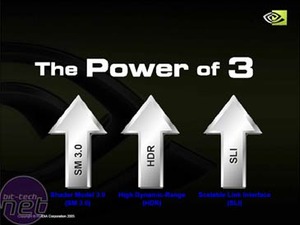

The Power of 3:

There has been some discussion in our video card forum recently about the banner that has been on NVIDIA's website for the last week or so. NVIDIA are launching a new campaign to promote the technologies that they have in shipping hardware and that ATI currently don't. Not surprisingly, that means NVIDIA are marketing the fact that they have Shader Model 3.0, HDR technology (we'll come on to that one in a second), and SLI dual-GPU capability.

You can, of course, achieve HDR on ATI's current Shader Model 2.0 video cards, as we showed you at the end of last week in our Splinter Cell: Chaos Theory patch 1.04 analysis. What NVIDIA really mean is that they can deliver "limitless" High Dynamic Range lighting, because the GeForce 6 and 7 series are capable of 16-bit floating point blending.
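To see why 16-bit floating point blending matters for HDR, here is a simplified numerical sketch. It is our own illustration, not a description of how any GPU's blending hardware actually works. It assumes an 8-bit-per-channel integer render target, where every blend result is clamped to the range 0 to 1 and stored in 1/255 steps, and compares it with a 16-bit floating point (half precision) target. The helper names are hypothetical; the half-precision rounding uses the `'e'` format code of Python's standard `struct` module.

```python
import struct


def store_unorm8(value: float) -> float:
    """Model an 8-bit unsigned normalised channel: clamp to [0, 1], then quantise to 1/255 steps."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255) / 255


def store_fp16(value: float) -> float:
    """Model a 16-bit floating point channel by rounding to IEEE 754 half precision."""
    # struct's 'e' format packs a Python float as a 16-bit half; the largest finite half is 65504.
    return struct.unpack("<e", struct.pack("<e", value))[0]


def additive_blend(layers, store) -> float:
    """Add each light contribution to the render target, storing the result after every blend."""
    target = 0.0
    for contribution in layers:
        target = store(target + contribution)
    return target


lights = [0.75, 0.5, 2.0]  # hypothetical light contributions hitting one pixel
print(additive_blend(lights, store_unorm8))  # 1.0
print(additive_blend(lights, store_fp16))    # 3.25
```

With these made-up values, the 8-bit target saturates at 1.0 (pure white) after the second light, while the FP16 target keeps the full 3.25 for a later tone-mapping pass to work with.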

ATI's upcoming R5xx series GPUs, which have yet to be announced, are believed to support 16-bit floating point blending. It's also reasonable to assume that they will be fully Shader Model 3.0 compliant, although it is debatable whether there is any significant difference in image quality between shader programs written for Shader Model 2.0 and those written for Shader Model 3.0.

It is also worth mentioning that today's shaders aren't long enough to make Shader Model 2.0 obsolete any time soon. That will happen at some point, though, once shader programs become so complex that they are too long to fit within the capabilities of Shader Model 2.0 hardware. Limited development budgets are a factor too: Shader Model 3.0 is considerably more efficient than Shader Model 2.0 from a coding perspective (features such as dynamic branching let one shader do the work of several), so developers are moving towards Shader Model 3.0 to save time and money on upcoming titles.
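As a rough illustration of what "too long for Shader Model 2.0 hardware" means, the sketch below checks a pixel shader's instruction mix against per-pass limits. The figures are the baseline DirectX 9 limits as we understand them (ps_2_0: 64 arithmetic plus 32 texture instructions; ps_3_0: at least 512 instruction slots). Extended 2.x profiles such as ps_2_b raise the 2.0 limits, and the shader sizes in the example are hypothetical. A shader that doesn't fit has to be simplified or split across multiple rendering passes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PixelShaderLimits:
    """Per-pass instruction limits for a pixel shader profile (baseline figures, assumed)."""
    name: str
    max_total: int
    max_arithmetic: Optional[int] = None  # ps_2_0 limits arithmetic and texture instructions
    max_texture: Optional[int] = None     # separately; ps_3_0 counts them in one pool


PS_2_0 = PixelShaderLimits("ps_2_0", max_total=96, max_arithmetic=64, max_texture=32)
PS_3_0 = PixelShaderLimits("ps_3_0", max_total=512)  # minimum a 3.0 part must expose


def fits_in_one_pass(limits: PixelShaderLimits, arithmetic: int, texture: int) -> bool:
    """Return True if a shader with this instruction mix fits within the profile's limits."""
    if arithmetic + texture > limits.max_total:
        return False
    if limits.max_arithmetic is not None and arithmetic > limits.max_arithmetic:
        return False
    if limits.max_texture is not None and texture > limits.max_texture:
        return False
    return True


# Hypothetical shaders: (arithmetic instructions, texture instructions)
simple_shader = (40, 8)
complex_lighting = (120, 30)

for profile in (PS_2_0, PS_3_0):
    print(profile.name,
          fits_in_one_pass(profile, *simple_shader),
          fits_in_one_pass(profile, *complex_lighting))
# ps_2_0 True False
# ps_3_0 True True
```

The simple shader runs on both profiles, which is why Shader Model 2.0 isn't obsolete yet; only the longer, hypothetical lighting shader pushes past the baseline 2.0 limit.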

Splinter Cell: Chaos Theory is a case in point: it shipped with only two shader paths, Shader Model 1.1 and Shader Model 3.0. As a result, all of ATI's current-generation hardware fell back to the Shader Model 1.1 path, giving NVIDIA's GeForce 6-series video cards a clear image quality advantage. A Shader Model 2.0 patch was only released last week, some three or four months after the title launched.
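The fallback follows from how a game typically chooses a shader path: it uses the most advanced path it ships that the hardware can run. Here is a minimal sketch assuming that selection rule. The function name and version tuples are hypothetical and this is not the game's actual code; ATI's current cards are treated as Shader Model 2.0 parts and GeForce 6 cards as Shader Model 3.0 parts.

```python
from typing import Sequence, Tuple

ShaderModel = Tuple[int, int]  # (major, minor), e.g. (3, 0) for Shader Model 3.0


def select_shader_path(shipped: Sequence[ShaderModel], hardware_max: ShaderModel) -> ShaderModel:
    """Return the highest shipped shader path that the hardware supports."""
    # Tuples compare element by element, so (1, 1) < (2, 0) < (3, 0).
    usable = [path for path in shipped if path <= hardware_max]
    if not usable:
        raise ValueError("no shipped shader path runs on this hardware")
    return max(usable)


at_launch = [(1, 1), (3, 0)]
after_patch = [(1, 1), (2, 0), (3, 0)]

print(select_shader_path(at_launch, (2, 0)))    # (1, 1): SM2.0 card falls back to SM1.1
print(select_shader_path(at_launch, (3, 0)))    # (3, 0): GeForce 6 uses the SM3.0 path
print(select_shader_path(after_patch, (2, 0)))  # (2, 0): the patch adds a path SM2.0 cards can use
```

A Shader Model 2.0 card gains nothing from a 3.0 path it cannot run; only adding a 2.0 path moves it off the 1.1 fallback.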

ATI also have their own dual-GPU platform, known as CrossFire, which is in the final stages of development. We covered the basics of the CrossFire platform back at the start of June. It has yet to ship, but we are expecting CrossFire motherboard samples as we speak, and we understand that CrossFire Edition video cards are close too: if everything goes to plan, they should ship at the end of August.

Are you beginning to spot a trend...?
